Enable access to the GKE API server from a disconnected VPC using Private Service Connect

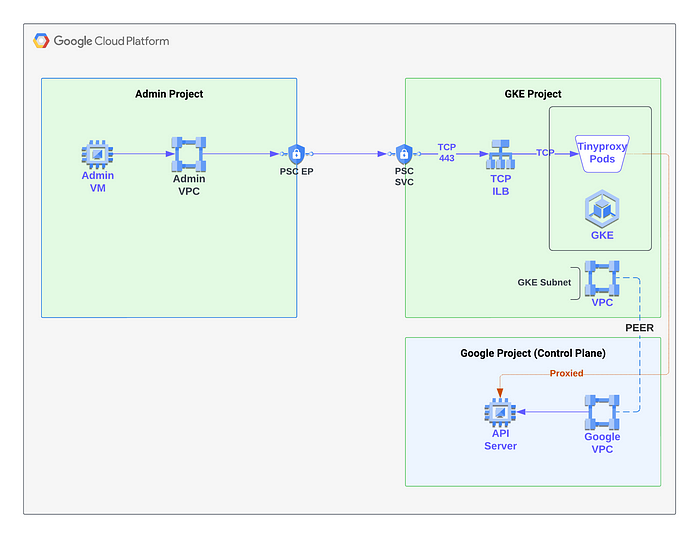

Private Service Connect (PSC) is a very creative Google Cloud Platform (GCP) feature that lets you share Google APIs or published services with other GCP projects over private networking. One of the main advantages of PSC is that you can access the published service through an IP address that is local to your VPC.

Alternatives such as VPC peering or VPN require both connected VPCs to have unique, non-overlapping IP address ranges, and by default they share all connected subnets with both VPCs. VPC peering is also non-transitive: networks that are connected or peered to one VPC are not shared with its peer.

The Problem

Recently, I came across a requirement to administer and provision resources in a private GKE cluster from another GCP project. This source project is attached to a different VPC than the one where the GKE cluster is configured.

By design, the GKE control plane, including the API server IP address, is in a Google VPC that is peered to the VPC where the nodes are deployed. Because routing across peered VPCs is non-transitive, the control plane cannot be reached by setting up another VPC peering from the source VPC to the GKE VPC.

In our situation, there were multiple such GKE clusters in disconnected networks that had to be administered from a central location (project/VPC).

VPN was also not a scalable solution to address this issue, partly due to cost and management overhead.

We could also not expose the API via a public endpoint due to security regulations.

Solution

Utilizing PSC and tinyproxy, this can be implemented as follows:

Create a deployment of tinyproxy in the destination GKE cluster into a namespace named tinyproxy.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: tinyproxy
      labels:
        name: tinyproxy
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: tinyproxy-cfg
      namespace: tinyproxy
    data:
      tinyproxy.conf: |
        User nobody
        Group nogroup
        Port 8888
        Allow 10.16.0.0/24
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: tinyproxy-dep
      namespace: tinyproxy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: tinyproxy
      template:
        metadata:
          labels:
            app: tinyproxy
        spec:
          containers:
          - image: tinyproxy:latest
            name: tinyproxy
            ports:
            - containerPort: 8888
              protocol: TCP
            volumeMounts:
            - name: config
              mountPath: "/etc/tinyproxy/tinyproxy.conf"
              subPath: tinyproxy.conf
          volumes:
          - name: config
            configMap:
              name: tinyproxy-cfg
              items:
              - key: tinyproxy.conf
                path: tinyproxy.conf

The above code does a few things:

- Create a Namespace called tinyproxy

- Create a ConfigMap with a minimal tinyproxy configuration file. Note that I have allowed access from the subnet 10.16.0.0/24 to tinyproxy. By default tinyproxy only allows connections from 127.0.0.1 (localhost), so any attempts to connect from other networks/IP addresses will be rejected.

- Create a deployment and mount the ConfigMap to the tinyproxy container in the location /etc/tinyproxy/tinyproxy.conf
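To roll this out, the manifests can be applied and the rollout checked with kubectl. This is a minimal sketch, assuming the YAML above has been saved as `tinyproxy.yaml` (a hypothetical file name) and that the current kubectl context already points at the destination cluster:

```shell
# Assumption: MANIFEST is a hypothetical file holding the Namespace,
# ConfigMap and Deployment shown above.
MANIFEST="${MANIFEST:-tinyproxy.yaml}"

deploy_tinyproxy() {
  # Create or update the three objects.
  kubectl apply -f "$MANIFEST" || return 1
  # Wait until the single replica is available, or give up after 2 minutes.
  kubectl -n tinyproxy rollout status deployment/tinyproxy-dep --timeout=120s
}
```

Calling `deploy_tinyproxy` returns a non-zero status if either the apply or the rollout fails, which makes it easy to chain into the next step.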

Create a Kubernetes Service of type LoadBalancer, annotated as an internal load balancer (ILB), that directs traffic to tinyproxy.

    apiVersion: v1
    kind: Service
    metadata:
      name: proxy-ilb
      namespace: tinyproxy
      annotations:
        networking.gke.io/load-balancer-type: Internal
    spec:
      ports:
      - name: tcp-port
        port: 443
        protocol: TCP
        targetPort: 8888
      selector:
        app: tinyproxy
      type: LoadBalancer

Publish a PSC service attached to the ILB deployed above. You will need to create a new subnet with the purpose ‘Private Service Connect’. If such a subnet doesn’t exist, you will be prompted to create one.

Add the source project, from which you need to access the API server, to the Accepted projects list. The completed configuration is shown in the screenshot.
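The same publishing steps can also be scripted with gcloud rather than clicked through in the console. The sketch below is an assumption-laden illustration, not the configuration I used: the region, network, subnet range, attachment name, project ID and connection limit are all hypothetical placeholders, and the forwarding rule name must be the one GKE generated for the `proxy-ilb` Service.

```shell
# All values below are hypothetical placeholders; replace them with your own.
REGION="us-central1"
NETWORK="gke-vpc"
PSC_SUBNET="psc-nat-subnet"
PSC_RANGE="10.100.0.0/28"
ATTACHMENT="tinyproxy-psc"
SOURCE_PROJECT="source-project-id"

publish_psc_service() {
  # $1: name of the forwarding rule that GKE created for the proxy-ilb Service

  # Subnet with the 'Private Service Connect' purpose, used for PSC NAT.
  gcloud compute networks subnets create "$PSC_SUBNET" \
    --network="$NETWORK" --region="$REGION" \
    --range="$PSC_RANGE" --purpose=PRIVATE_SERVICE_CONNECT || return 1

  # Service attachment that only accepts connections from the source project
  # (the "=10" is an arbitrary connection limit chosen for this sketch).
  gcloud compute service-attachments create "$ATTACHMENT" \
    --region="$REGION" \
    --producer-forwarding-rule="$1" \
    --connection-preference=ACCEPT_MANUAL \
    --consumer-accept-list="${SOURCE_PROJECT}=10" \
    --nat-subnets="$PSC_SUBNET"
}
```

The generated forwarding rule name can be looked up with `gcloud compute forwarding-rules list` and passed in, for example `publish_psc_service <forwarding-rule-name>`.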

In the source project, create a PSC endpoint to the published service. Choosing ‘Enable global access’ will allow this endpoint to be reachable from all regions in the source VPC.
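For reference, the endpoint can also be created from the command line in the source project. This is a sketch under assumptions: apart from the endpoint IP address, which matches the one in my configuration, every name and the region are hypothetical placeholders, and the service attachment URI comes from the project that hosts the GKE cluster.

```shell
# Hypothetical placeholders, except ENDPOINT_IP which matches the article.
REGION="us-central1"
SOURCE_NETWORK="source-vpc"
SOURCE_SUBNET="source-subnet"
ENDPOINT_NAME="gke-proxy-endpoint"
ENDPOINT_IP="10.32.0.100"

create_psc_endpoint() {
  # $1: service attachment URI, in the form
  #     projects/PROJECT/regions/REGION/serviceAttachments/NAME

  # Reserve the internal IP address the endpoint will listen on.
  gcloud compute addresses create "${ENDPOINT_NAME}-ip" \
    --region="$REGION" --subnet="$SOURCE_SUBNET" \
    --addresses="$ENDPOINT_IP" || return 1

  # The forwarding rule is the PSC endpoint itself; global access makes it
  # reachable from every region of the source VPC.
  gcloud compute forwarding-rules create "$ENDPOINT_NAME" \
    --region="$REGION" --network="$SOURCE_NETWORK" \
    --address="${ENDPOINT_NAME}-ip" \
    --target-service-attachment="$1" \
    --allow-psc-global-access
}
```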

In my configuration, the PSC endpoint was configured at IP address 10.32.0.100. By setting the https_proxy environment variable to point to this address, kubectl commands can be run against the cluster without direct network connectivity to its VPC, as shown in the screenshot.
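In shell terms, the proxy setting looks roughly like the sketch below. It assumes the kubeconfig already points at the cluster's private endpoint and that the proxy is reached on port 443, the port exposed by the `proxy-ilb` Service; the `kproxy` wrapper name is made up for illustration.

```shell
# PSC endpoint IP from the article; 443 is the port exposed by proxy-ilb.
PSC_ENDPOINT_IP="10.32.0.100"
PROXY_URL="http://${PSC_ENDPOINT_IP}:443"

# Hypothetical wrapper: sets the proxy for a single kubectl invocation only,
# so other tools in the same shell keep their normal network path.
kproxy() {
  HTTPS_PROXY="$PROXY_URL" kubectl "$@"
}
```

With this in place, `kproxy get nodes` sends the request through tinyproxy, while a plain `kubectl get nodes` still tries to connect directly.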

Caveats and Considerations

- By default, this configuration allows every machine in the source VPC to connect via HTTPS to any IP address that the tinyproxy instance can reach, provided the caller knows that IP address. Restrict this with tinyproxy configuration and/or firewall rules in the source and destination VPCs.

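- If you need to create your own container image for tinyproxy, here is a Dockerfile that builds a minimal tinyproxy image on Alpine.

      FROM alpine:3.20
      RUN apk add --no-cache bash tinyproxy
      COPY run.sh /opt/docker-tinyproxy/run.sh
      # Make sure the entrypoint script is executable inside the image
      RUN chmod +x /opt/docker-tinyproxy/run.sh
      EXPOSE 8888
      ENTRYPOINT ["/opt/docker-tinyproxy/run.sh"]

  The run.sh script copied into the image:

      #!/bin/bash
      # run.sh
      # Run tinyproxy in the foreground (-d) so the container keeps running
      exec /usr/bin/tinyproxy -d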
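For the first caveat above, one way to tighten tinyproxy is to limit CONNECT requests to port 443 and to allow only the API server address as a destination. The sketch below generates such a configuration locally. The API server IP and output directory are hypothetical, and it assumes tinyproxy applies its host filter to CONNECT requests the same way it does to plain HTTP requests, which is worth verifying on the tinyproxy version you run.

```shell
# Hypothetical values: replace with the private endpoint IP of your cluster.
API_SERVER_IP="172.16.0.2"
OUT_DIR="${OUT_DIR:-./tinyproxy-restricted}"
mkdir -p "$OUT_DIR"

# Filter file: one regular expression per line. Dots are escaped so that the
# pattern matches only this exact address.
escaped_ip=$(printf '%s' "$API_SERVER_IP" | sed 's/\./\\./g')
printf '^%s$\n' "$escaped_ip" > "$OUT_DIR/filter"

# Same base settings as the ConfigMap above, plus three restrictions:
#   ConnectPort       - CONNECT is only allowed to port 443
#   Filter            - path of the filter file inside the container
#   FilterDefaultDeny - anything not matched by the filter is rejected
cat > "$OUT_DIR/tinyproxy.conf" <<'EOF'
User nobody
Group nogroup
Port 8888
Allow 10.16.0.0/24
ConnectPort 443
Filter "/etc/tinyproxy/filter"
FilterDefaultDeny Yes
EOF
```

Both generated files would then need to go into the ConfigMap, with the filter file mounted at `/etc/tinyproxy/filter` alongside `tinyproxy.conf`.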